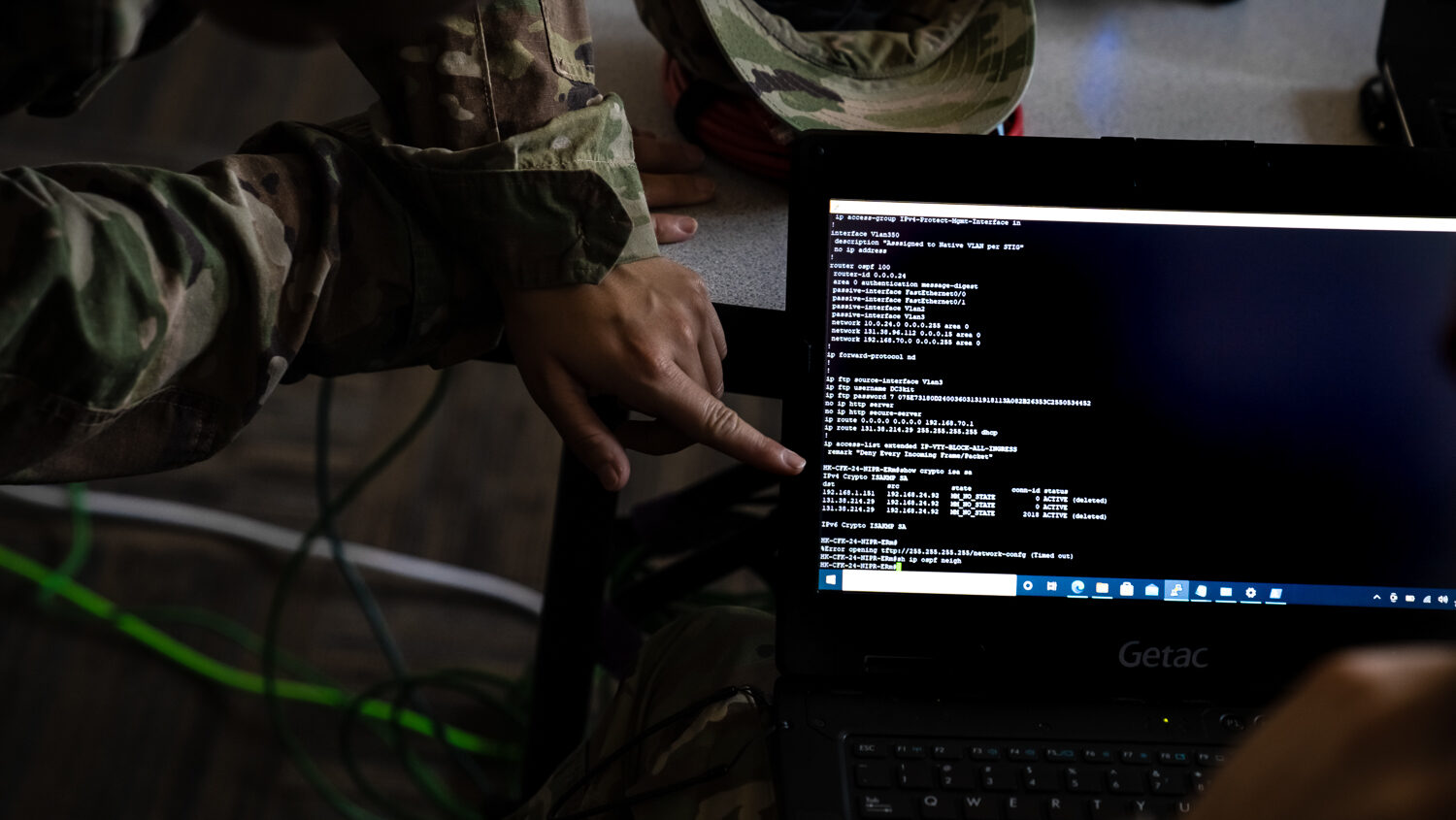

Members of the 56th Air and Space Communications Squadron at Joint Base Pearl Harbor-Hickam operate cyber systems at Alpena Combat Readiness Training Center, Alpena, Michigan, July 12, 2021. (U.S. Air Force photo by Tech. Sgt. Amy Picard)

WASHINGTON — Generative artificial intelligence startup Ask Sage recently announced it had deployed its genAI software to the Army’s secure cloud, cArmy. The press release touted a host of processes the new tech could automate, such as software development, cybersecurity testing, and even parts of the federal acquisition system — “to include drafting and generating RFIs, RFPs, scope of work, defining requirements, down-selecting bidders and much more.”

That last litany of tasks may prove one of the most delicate the Department of Defense has yet asked algorithms to tackle in its now year-long and deeply ambivalent exploration of what’s called genAI. It’s also an area where both Ask Sage and the Army told Breaking Defense they will be watching closely, monitoring the effectiveness of several layers of built-in guardrails.

Automating acquisition is an especially ambitious and high-stakes task. Protests by losing bidders are so common that program managers often build a three-month buffer into their schedules to account for a “stop work” order while GAO investigates. Flaws in official documents — such as the “RFIs [Requests For Information], RFPs [Requests For Proposal], scope of work, [and] requirements” mentioned in the Ask Sage release — can lead to court battles that disrupt a program. And early experiments in using genAI for matters of law have been less than successful, with multiple lawyers facing sanctions after using ChatGPT and similar software for legal research only to have it “hallucinate” plausible but completely fictional precedents.

The first and last line of defense, Ask Sage and Army officials said, is the human using the technology. But there are also algorithmic protections “under the hood” of the software itself. Instead of simply consulting a single Large Language Model (LLM) the way ChatGPT does, Ask Sage uses multiple programs — not all of them genAI — as checks and balances to catch and correct mistakes before a human ever sees them.

“All contracts will still go through our legal process and humans will remain in the loop,” said the Army, in a statement from the office of the service Chief Information Officer to Breaking Defense following Ask Sage’s announcement last week. “We are exploring ways to use LLMs to optimize language in contracts [because] LLMs can analyze vast amounts of contract data and understand requirements for compliance with complex legal frameworks. This cuts down on the human-intensive work required to research and generate the language and, instead, uses LLMs to get to an initial solution quickly; then humans work with the LLMs to refine the output. … The human workload can then focus on applying critical thinking.”

The old arms-control principle of “trust but verify” applies, said Nicolas Chaillan, the former chief software officer of the Air Force, who left the service in frustration over bureaucracy in 2021 and founded Ask Sage in a burst of genAI enthusiasm just last year. “It’s always reviewed by a human,” he told Breaking Defense in an interview. “The human is going to read the whole thing.”

But anyone who’s ever mindlessly clicked “Okay” on pages of tedious privacy policies and user agreements knows very well that humans do not, in fact, always read the whole thing. AI ethicists and interface designers alike wrestle with a problem known as automation bias, in which everything from poor training to subtle perceptual cues — like highlighting a potential hostile contact in threatening red rather than cautionary yellow — can lead operators to blindly trust the machine instead of checking it.

So Ask Sage does try very hard to build error correction, hallucination detection, and other safeguards into the software itself, Chaillan said.

AI Checks And Balances

First and foremost, Chaillan told Breaking Defense, the Ask Sage software doesn’t just consult a single Large Language Model. Instead, it’s a “model-agnostic” intermediary or “abstraction layer” between the human user and their data on the one hand, and a whole parliament of different AI models, over 150 of them, on the other.

“You don’t want to put all your eggs in one basket,” Chaillan said. “We’re never getting locked into OpenAI or Google” or any other specific “foundation model” developer, he said. “We can add models and even compare models and see which ones behave the best,” even picking different models for different tasks.
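The article does not describe how Ask Sage’s abstraction layer is built. As a rough illustration of the general idea of a model-agnostic layer, here is a minimal Python sketch, assuming each “model” is simply a function from prompt to response. The class `ModelRouter`, its methods, and the stand-in models are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Any callable that turns a prompt into a response counts as a "model" here.
# In practice each entry would wrap a vendor's API; these are stand-ins.
Model = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical abstraction layer: callers ask for a task, not a vendor."""

    models: Dict[str, Model] = field(default_factory=dict)
    routes: Dict[str, str] = field(default_factory=dict)  # task name -> model name

    def register(self, name: str, model: Model) -> None:
        self.models[name] = model

    def route(self, task: str, model_name: str) -> None:
        # Re-pointing a task at another model needs no change in calling code.
        if model_name not in self.models:
            raise KeyError(f"unknown model: {model_name}")
        self.routes[task] = model_name

    def run(self, task: str, prompt: str) -> str:
        return self.models[self.routes[task]](prompt)

    def compare(self, prompt: str) -> Dict[str, str]:
        # Same prompt to every registered model, for side-by-side evaluation.
        return {name: model(prompt) for name, model in self.models.items()}


router = ModelRouter()
router.register("model_a", lambda p: f"A says: {p}")
router.register("model_b", lambda p: f"B says: {p}")
router.route("draft_rfi", "model_a")
print(router.run("draft_rfi", "define scope"))  # A says: define scope
router.route("draft_rfi", "model_b")  # swap vendors without touching callers
print(router.run("draft_rfi", "define scope"))  # B says: define scope
```

The point of the indirection is that callers only ever name a task, so swapping or comparing vendors is a one-line routing change rather than a rewrite.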

For some functions, there are also non-AI algorithms — old-school, predictable, deterministic IF-THEN-ELSE code — that double-check the AI’s work.

“I always want to have … those right guard rails — and it took way more than just genAI to solve,” Chaillan emphasized. “So it’s a mix of genAI with traditional code and special training of the models and guard rails to get to a right answer. You couldn’t do it just with genAI.”

“We make it self-reflect,” he said. “When it generates a piece of document, we then pass it again to another model to say, ‘Hey, assess this language. What could be the potential legal risks? Is this compliant and following the FARS and DFARS requirements?’”

“It’s not just a ‘one prompt and go’ like you do on ChatGPT, where you type a piece of text and you get a response,” he said.
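Chaillan describes two kinds of checks layered on a draft: deterministic rule-based code and a second model asked to critique the first model’s output. The sketch below shows that general pattern in Python under stated assumptions. The required section names, the placeholder rule, the function names, and the fake drafter and reviewer are all hypothetical; they are not Ask Sage’s actual rules or code.

```python
import re
from typing import Callable, Dict, List

Model = Callable[[str], str]

# Hypothetical deterministic rules: plain IF-THEN checks with no AI involved.
REQUIRED_SECTIONS = ["Scope of Work", "Period of Performance"]
# Bracketed placeholders such as [TBD] or [INSERT DATE] left in a draft.
PLACEHOLDER = re.compile(r"\[(?:TBD|INSERT[^\]]*)\]")


def rule_checks(draft: str) -> List[str]:
    problems = []
    for section in REQUIRED_SECTIONS:
        if section not in draft:
            problems.append(f"missing section: {section}")
    if PLACEHOLDER.search(draft):
        problems.append("unfilled placeholder found")
    return problems


def generate_and_review(request: str, drafter: Model, reviewer: Model) -> Dict[str, object]:
    draft = drafter(request)
    # Deterministic pass: the same draft always yields the same findings.
    issues = rule_checks(draft)
    # "Self-reflection" pass: a second model critiques the first model's draft.
    critique = reviewer(
        "Assess this language. What are the potential legal risks? "
        "Is it compliant with FAR and DFARS requirements?\n\n" + draft
    )
    return {"draft": draft, "rule_issues": issues, "critique": critique}


# Stand-in models so the pipeline runs without any real LLM.
def fake_drafter(request: str) -> str:
    return "Scope of Work: cloud hosting. Period of Performance: [TBD]"


def fake_reviewer(prompt: str) -> str:
    return f"reviewed {len(prompt)} characters"


result = generate_and_review("Draft an RFI", fake_drafter, fake_reviewer)
print(result["rule_issues"])  # ['unfilled placeholder found']
```

Keeping the rule checks outside the models means their findings are repeatable, while the reviewer model covers the open-ended questions no fixed rule can anticipate.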

In fact, even the human input is constrained and formalized to prevent errors: “Writing an RFI is not just one prompt,” he went on. The software guides the user through a whole checklist of questions — what type of contract is best here? What’s the scope of work? How should we downselect to a winner? — before generating the output.
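A guided checklist of this kind can be thought of as a structured form that must be complete before any prompt is built. Here is a minimal Python sketch of that idea, assuming three questions taken from the examples above; the `RfiIntake` form and its helper functions are hypothetical and do not reflect Ask Sage’s actual questionnaire.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class RfiIntake:
    """Hypothetical intake form; every answer is required before drafting."""

    contract_type: str
    scope_of_work: str
    downselect_criteria: str


def missing_answers(intake: RfiIntake) -> List[str]:
    # Names of any fields the user left blank or filled only with whitespace.
    return [f.name for f in fields(intake) if not getattr(intake, f.name).strip()]


def build_prompt(intake: RfiIntake) -> str:
    gaps = missing_answers(intake)
    if gaps:
        raise ValueError(f"answer these before drafting: {gaps}")
    return (
        "Draft a Request for Information.\n"
        f"Contract type: {intake.contract_type}\n"
        f"Scope of work: {intake.scope_of_work}\n"
        f"Down-select criteria: {intake.downselect_criteria}\n"
    )


partial = RfiIntake("firm-fixed-price", "cloud hosting", "  ")
print(missing_answers(partial))  # ['downselect_criteria']
```

Refusing to draft until every answer is present constrains the human input the same way the article describes, instead of letting a single free-form prompt stand in for the whole requirement.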

That output can include not only the draft document, Chaillan said, but also commentary from the AI highlighting potential weak points where the algorithms were unsure of themselves. (That’s a far cry from the brazen self-confidence of many chatbots defending their errors.)

“They get a report before reviewing the document to say, ‘Hey, you should pay attention to these sections. Maybe this isn’t clear,’” he said. “You give them a list of things that could be potential issues.”
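The article does not say how the software decides which sections to flag. One simple way to produce such a pre-review report is sketched below in Python, assuming each section arrives with a confidence score between 0 and 1 from some upstream check, plus a short list of phrases that often make contract language ambiguous. The function name, threshold, phrase list, and scores are illustrative assumptions only.

```python
from typing import Dict, List

# Hypothetical phrases that tend to make contract language ambiguous.
VAGUE_PHRASES = ("as appropriate", "as needed", "etc.", "and/or")


def review_report(sections: Dict[str, str], scores: Dict[str, float],
                  threshold: float = 0.7) -> List[str]:
    """Return notes on the sections a human reviewer should read first.

    `scores` is an assumed per-section confidence in [0, 1] from an upstream check.
    """
    notes = []
    for name, text in sections.items():
        score = scores.get(name, 0.0)  # unscored sections count as low confidence
        if score < threshold:
            notes.append(f"{name}: low confidence ({score:.2f})")
        hits = [p for p in VAGUE_PHRASES if p in text.lower()]
        if hits:
            notes.append(f"{name}: possibly unclear wording {hits}")
    return notes


sections = {
    "Scope": "Provide hosting and/or support as needed.",
    "Deliverables": "Monthly status report.",
}
scores = {"Scope": 0.55, "Deliverables": 0.92}
for note in review_report(sections, scores):
    print(note)
# Scope: low confidence (0.55)
# Scope: possibly unclear wording ['as needed', 'and/or']
```

A report like this does not replace reading the whole document; it only tells the reviewer where to look hardest, which is one way to push back against the automation bias described earlier.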

In fact, Chaillan argued, the AI is often better at spotting problems than human beings. For software development, “the code is actually better than it is when written by humans,” he said, “with the right models, the right training, and the right settings.”

The software is even superior, he claimed, when it comes to parsing legal language and federal acquisition regulations — which, after all, are not only voluminous but written in convoluted ways no human brain can easily understand. “Humans can’t remember every part of the FARS and DFARS — and there’s conflicting things [in different regulations],” he said. “Ask 10 people, you’d get 10 different answers.”