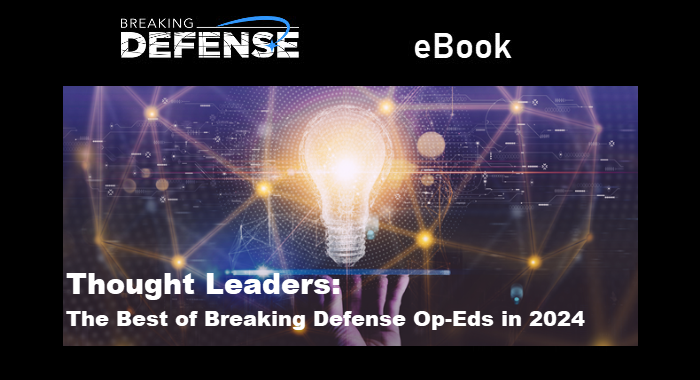

A ground crew preps the X-62 Variable In-flight Simulator Aircraft (VISTA) at Edwards Air Force Base to fly on Nov. 30, 2023 (Michael Marrow/Breaking Defense)

WASHINGTON — High in the skies above the desert sands of Edwards Air Force Base in September 2023, a team composed of members of the Air Force, DARPA and industry had an AI-controlled F-16 and a human-controlled one maneuver against each other in dogfights, a feat the team is calling a “world first.”

Over the span of a couple of weeks, a manned F-16 faced off in a series of trials against a bespoke Fighting Falcon known as the Variable In-flight Simulator Aircraft, or VISTA. A human pilot sat in the VISTA’s cockpit for safety reasons, but an AI agent did the flying, with results officials described as impressive, though they declined to provide specific details, such as the win/loss ratio of the AI pilot, citing “national security” reasons.

The AI agents employed by the team “performed well in general,” meaning that “our ongoing research is a clear signal that we’re moving in the right direction,” Air Force Test Pilot School Commandant Col. James Valpiani said in a briefing with reporters today.

The trials mark a signature victory for DARPA’s Air Combat Evolution (ACE) program, which is seeking to increase human trust in autonomous platforms that the military services hope to deploy in combat. The Air Force’s Collaborative Combat Aircraft (CCA) effort, for example, could start producing loyal wingman drones that fly and fight alongside manned jets before the end of the decade.

A key problem for manned-unmanned teaming is ensuring autonomy can be trusted to safely operate an aircraft, particularly through harrowing maneuvers inherent in a dogfighting scenario.

So, the ACE team toiled to encode safety algorithms for the AI pilot, sometimes iterating on flight control software within a single day, according to a video released by DARPA. Officials eventually gained enough confidence to fly the VISTA in real-world dogfighting scenarios against an F-16, and while fail-safes were put in place to prevent disaster, the VISTA never violated human norms for safe flight, the DARPA video says.

Officials emphasize that AI’s ability to dogfight is a spectacular feat, but the capability is a means to a bigger end: demonstrating that AI can be trusted to fly a fighter jet, paving the way to even greater airborne autonomy testing.

“Asking the question of who won [the dogfights] doesn’t necessarily capture the nuance of the testing that we accomplished,” said DARPA ACE Program Manager Lt. Col. Ryan Hefron. According to Valpiani, the larger point of the program is “how do we apply machine learning to combat autonomy.”

One concern highlighted by Valpiani is that there is currently no pathway to achieve flight certification for an aircraft controlled by machine learning (ML). ML-controlled flight has been a fraught concept in years past, particularly because a machine’s reasoning can be opaque. “The potential for machine learning in aviation, whether military or civil, is enormous,” he said. “And these fundamental questions of how do we do it, how do we do it safely, how do we train them, are the questions that we are trying to get after.”

The VISTA aircraft has been flying since the 1990s, but was more recently outfitted with special tech to convert the jet into an autonomy testbed and has since served as a critical proving ground to mature AI software. The Air Force is working to adapt more F-16s through a program known as VENOM for a similar role as it seeks to expand AI testing.

Looking ahead, Hefron said a milestone in the cards for the ACE program is demonstrating collaborative AI dogfighting between a human and an AI pilot, as well as “incorporat[ing]” lessons into future programs, whether a follow-on effort for DARPA or the services’ own drone endeavors. The VENOM and VISTA aircraft will also complement each other with “their own unique place[s]” as testbeds, he said.

Valpiani said he expects the autonomous capabilities derived from programs like ACE can “augment but not necessarily replac[e] human functions,” highlighting the development of the Auto Ground Collision Avoidance System as one example. The longer-term question of AI supplanting human pilots, however, was a “speculative, almost philosophical” one better left for Air Force planners who decide on policy.

“We think that there’s tremendous promise in the way that we have approached this and the lessons that we’re learning,” Valpiani said, “and we look forward to continuing to advance in this space going forward.”