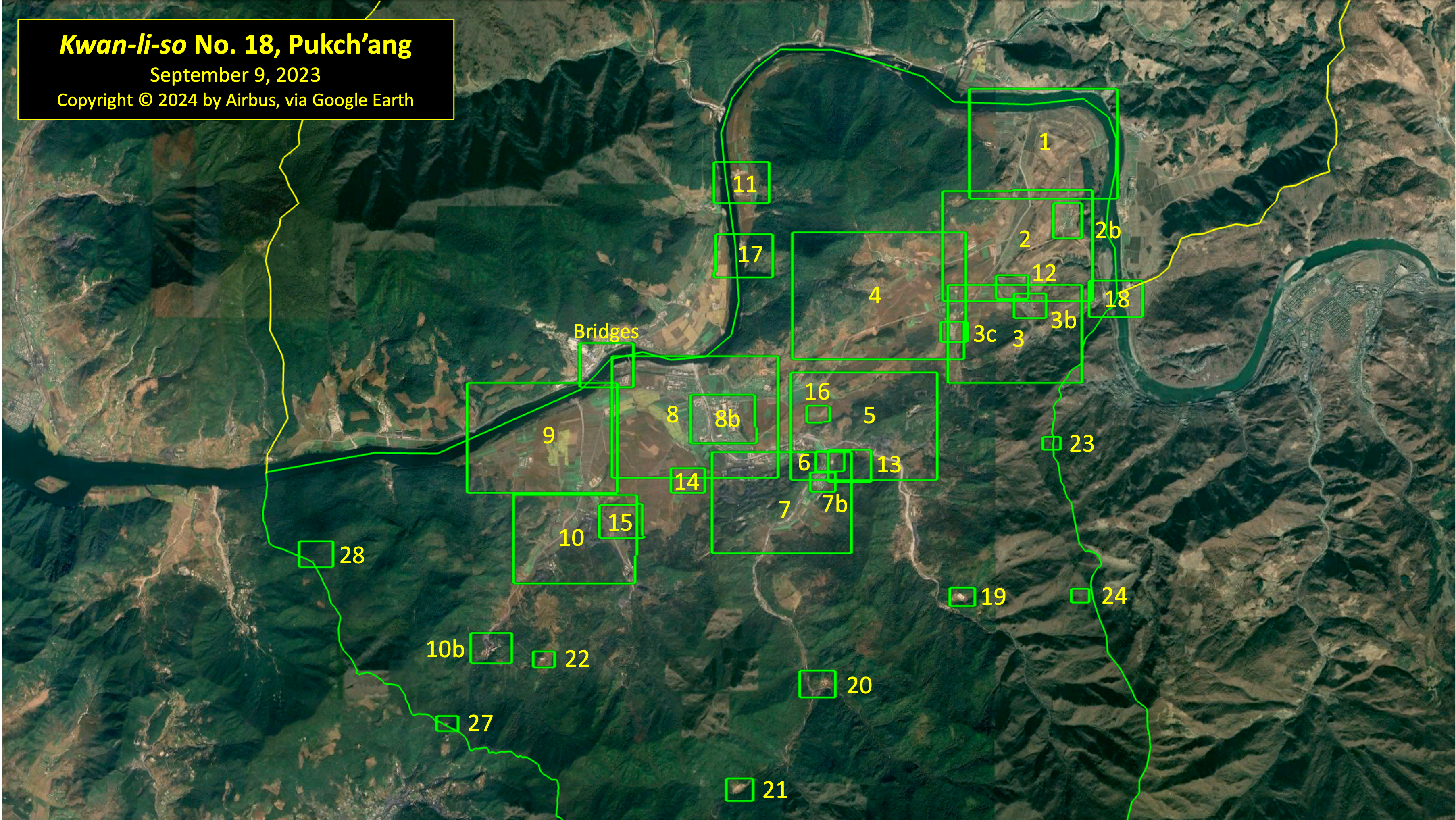

Overview of Kwan-li-so No. 18, September 9, 2023 (Copyright © 2024 by Airbus, via Google Earth, via NGA’s Tearline)

WASHINGTON — The director of data and digital innovation at the National Geospatial-Intelligence Agency said the NGA has started training artificial intelligence algorithms on its unique trove of visual and textual data.

This data is “an AI gold mine,” said Mark Munsell. That’s not just because it consists of large amounts of well-labeled, well-organized, and carefully vetted data, accumulated over decades by the intelligence agency tasked with compiling and analyzing geospatial data for policymakers from the president on down. It’s also because this data is what experts call multi-modal, combining images with text descriptions.

Contrast that with GenAI companies that are feverishly scraping everything from Reddit posts to YouTube captions in their desperate quest for training data, all of it pure text with no ability to cross-reference other kinds of sources.

“We’re in the early days of some really cool experiments,” Munsell told the annual INSA Intelligence & National Security Summit on Tuesday. “Those experiments involve taking the visual record of the Earth that we have from space … and merging it with millions and millions of finely curated humans’ reports about what they see on those images.”

“We’re sitting on two big sets of data, a gold mine of data, where we have images that nobody else in the world has, and … experts that have described what’s on those images in expert language and text,” Munsell continued. “We’re really looking forward to this multi-modal experiment, where we bring those two things together.”


Large Language Models like ChatGPT erupted into public view last year and have fired fierce debate over AI ever since. Skeptics point to their tendency to “hallucinate” convincing falsehoods and misinformation. Enthusiasts argue that, properly safeguarded, they can revolutionize knowledge-based tasks that once required tedious clerical work. Some well-credentialed commentators even claim that LLMs are on the verge of superhuman “artificial general intelligence.”

Unlike humans, however, LLMs operate purely on text: They train on text, take input in text, and output answers as text. Other forms of generative artificial intelligence can correlate text and imagery well enough to turn users’ written prompts into pictures or even video.

But many in industry have already been working on the next frontier: multi-modal AI. The crucial capability is to cross-reference different types of information on the same thing, like an image or video with the associated caption describing it in words, much the way a human brain can associate an idea or memory with information from all the senses.

Multi-modal AI can even work with senses that human beings don’t have, like infra-red imagery, radio and radar signals, or sonar. “That enables a lot of avenues that would have been closed to us,” said DARPA program manager William Corvey, speaking at the same INSA panel as NGA’s Munsell. “[Imagine] cross-modal systems that can reconcile visual and linguistic information and other kinds of modalities of sensors that might be available to a robot but aren’t to a human being.”

Modern AI algorithms have proven perfectly capable of working with images, video, and all sorts of sensor data, not just text, because they can abstract any and all of them into the same mathematical representations.

“You can start to knit together reality in these new ways and induce these alignments across what were previously completely different systems,” Corvey continued, “and that’s all because these models for all of their expensive training turn out to be really parsimonious representations of reality. They just take sort of everything and squish it into a panini press, and then you can insert that sandwich that you have, basically, into any number of different meals.”

The hard part, it turns out, is getting the data cleaned, curated, and correlated enough for the AI to ingest it in the first place. Many popular “AI” systems actually rely on large numbers of humans working long hours for low wages to click checkboxes on images and videos. Sometimes the humans involved even develop PTSD as they try to moderate hateful and violent content.

“We use hundreds, maybe even thousands, of humans to train these models… and then we have hundreds or thousands of humans that are giving feedback to the models,” Munsell told the INSA audience. “You gotta ask yourself at some point, what are we doing here?”

So it’s a tremendous advantage when an organization has access to a large amount of data that highly trained humans have already spent decades analyzing, verifying, cataloging, and commenting on. But archives like NGA’s are far too large for any one human mind to hold in full. AI opens up the possibility of training algorithms on the data and then asking them to spot patterns, resemblances, and anomalies.

“We’ll be able to ask questions, historic questions,” Munsell said. “‘Hey, AI? Have you ever seen this particular activity, this kind of object, in this part of the world?’”

“What a cool thing that will be for our country,” he enthused.

Munsell’s ultimate boss, NGA chief Vice Adm. Frank Whitworth, said at another panel today that the agency is already using AI to “triage” some of the workload of imagery analysis, but will continually need an AI “edge” to keep up as more and more data comes in from space-based sensors.

The stakes are high to get it right, Munsell emphasized, saying an expert human should always be double-checking the AI, especially if a report might be used for targeting a military strike.

“The visual is the ultimate positive identification for our customers,” he said. “In many cases they’re making, they’re acting upon that positive identification, they’re acting upon the visual identity and the geolocation of what we’re providing. And when artificial intelligence provides that, you have to take those extra steps to make…that thing is 100% correct.”

At NGA, he said, “literally for us, seeing is believing.”

Lee Ferran contributed to this story.